App Store Compliance

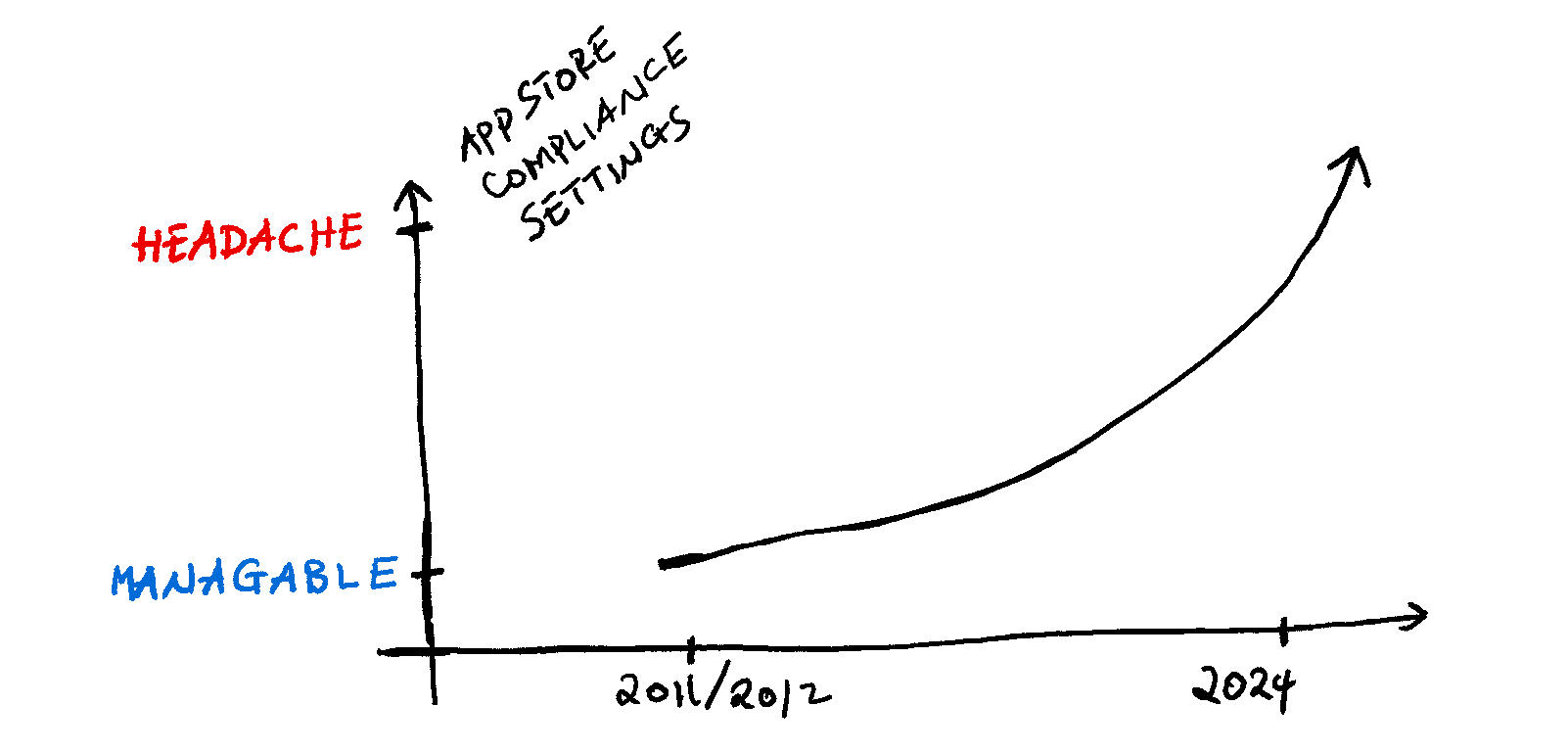

Building an app & submitting it to the app store used to be a trivial process. I have been building apps since 2011/12 and have watched app store settings morph from a single screen into multi-page declaration forms that take hours to understand and comply with. This is especially true if the app handles user data. This shouldn't be a surprise given the torrent of privacy & security headlines affecting both the iOS & Android platforms.

These days it's almost impossible to upload an app without a privacy policy, and almost impossible to upload one without filling out a declaration form. That holds even for very basic apps like a flashlight or calculator, because most apps today include some kind of analytics, which forces developers to declare how data is collected and shared.

While we all applaud transparency, it's becoming cumbersome to manage the ever-growing list of settings and forms one has to fill out just to stay compliant. This headache compounds as we move to a multi-app-store world.

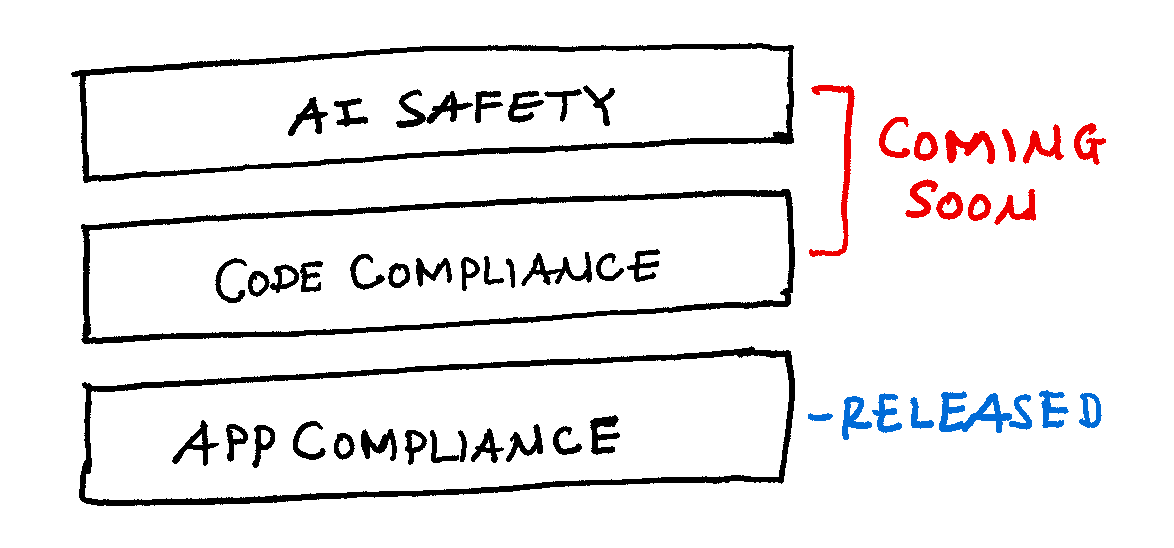

We need a Vanta-like solution, but for mobile apps. Luckily, Google released Checks to address this gap. It has three main objectives: App Compliance, Code Compliance & AI Safety. App Compliance scans your app against app store compliance requirements and helps you stay up to date. It's an extension of your privacy team (if you have one ;). Code Compliance brings this analysis down to the code level, e.g. inspecting code for location access, data sharing, etc. AI Safety has not been released yet, but judging from the website copy, it targets apps that use AI/LLMs and the privacy considerations they need to make.

App Compliance

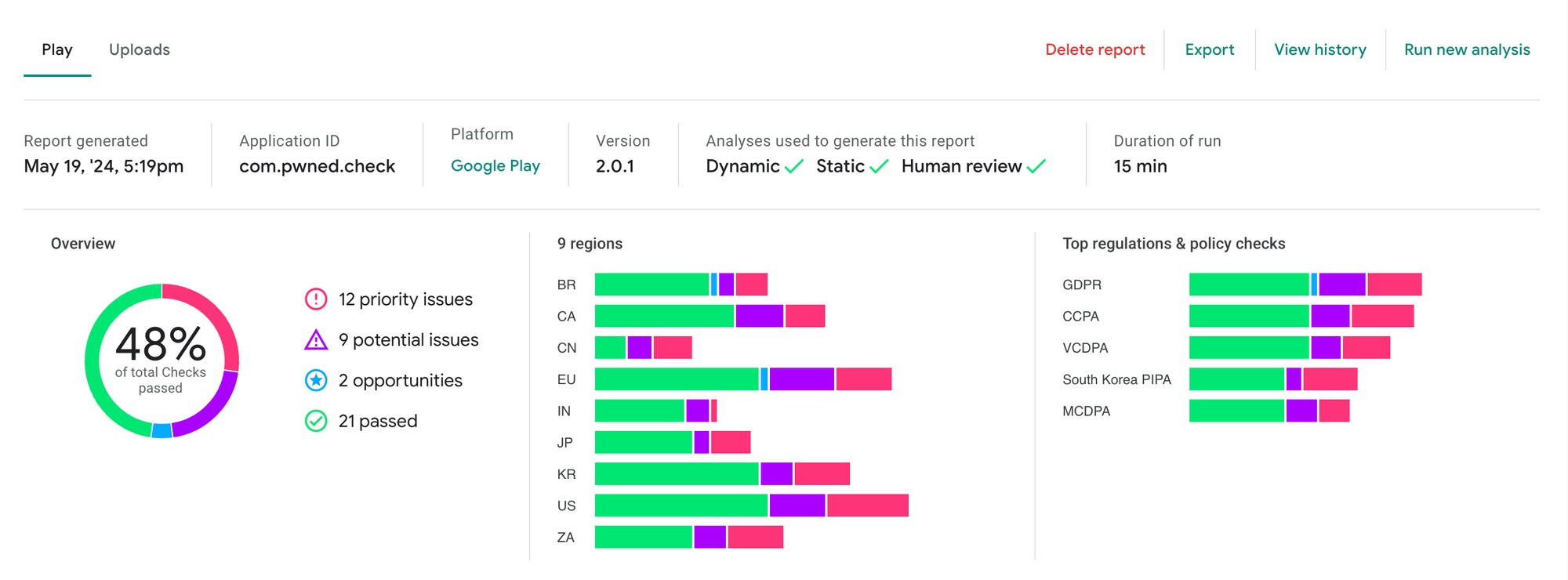

Checks is a free tool for developers and privacy engineers. I created an account and uploaded an app. Checks can pull your app directly from the Play Store, or you can upload APK/IPA binaries from the console. I connected the Pwned Report app and generated the report below.

Here Checks ran three types of scans: static, dynamic & human review 🤔. The first two are widely used techniques for analyzing apps, but the last is a little surprising, since human review is hard to scale when there are potentially numerous scan requests. Another challenge is fully exploring an application's paths. An app might require an account signup, while other functionality is triggered by other applications or system events, which limits how completely Checks can map the app's pathways. There are many ways to tackle this problem, and with Google's AI they may be able to reduce it to a manageable level. Earlier this year, I wrote about this problem and how GenAI can help.

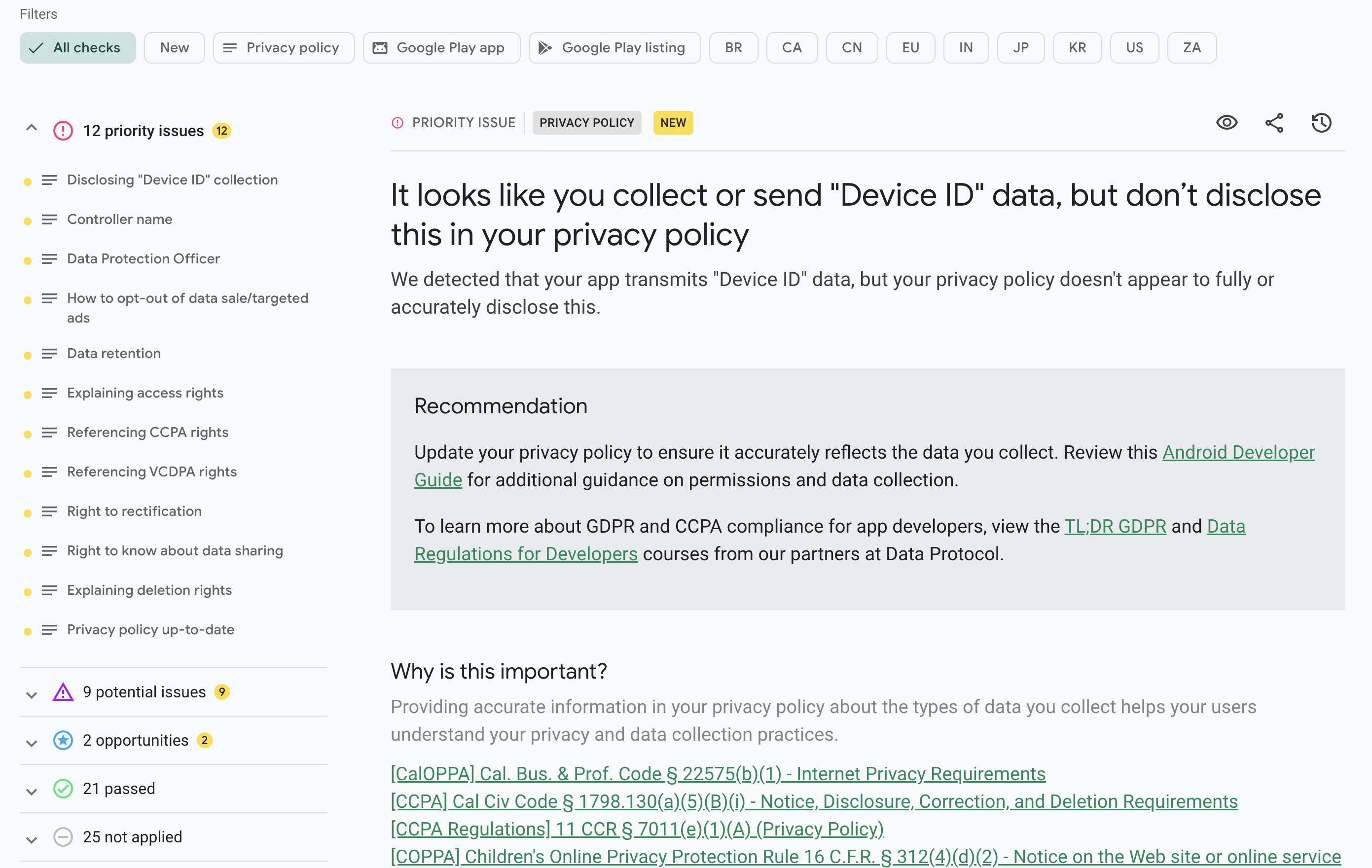

As you can see, the app has 12 priority issues and a few others that need some attention. You can filter these issues by region & regulation (e.g. GDPR, CCPA, VCDPA). Each issue comes with a detailed description. For example, Checks detected that a device ID was transmitted but not disclosed in the privacy policy: "It looks like you collect or send "Device ID" data, but don’t disclose this in your privacy policy."
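Conceptually, that finding compares the data types observed leaving the app against what the privacy policy discloses. Checks' actual pipeline isn't public, so the sketch below is only an illustration: it assumes the observed data types are already known (e.g. from the dynamic scan) and uses naive keyword matching as a stand-in for whatever NLP/LLM analysis Google runs. `DISCLOSURE_TERMS` and `find_disclosure_gaps` are hypothetical names.

```python
"""Hypothetical sketch of a 'collected but not disclosed' check.

Naive keyword matching stands in for the NLP/LLM analysis Checks likely uses.
"""
from dataclasses import dataclass

# Hypothetical phrases a policy might use to disclose each data type.
DISCLOSURE_TERMS: dict[str, list[str]] = {
    "Device ID": ["device id", "device identifier", "advertising id", "android id"],
    "Email address": ["email", "e-mail"],
    "Precise location": ["precise location", "gps"],
}


@dataclass
class Issue:
    data_type: str
    message: str


def find_disclosure_gaps(observed: set[str], policy_text: str) -> list[Issue]:
    """Flag observed data types that the policy text never mentions."""
    text = policy_text.lower()
    issues = []
    for data_type in sorted(observed):
        # Fall back to the data type's own name if we have no synonyms for it.
        terms = DISCLOSURE_TERMS.get(data_type, [data_type.lower()])
        if not any(term in text for term in terms):
            issues.append(Issue(
                data_type,
                f'It looks like you collect or send "{data_type}" data, '
                "but don't disclose this in your privacy policy",
            ))
    return issues


if __name__ == "__main__":
    policy = "We collect your email address to send breach alerts."
    for issue in find_disclosure_gaps({"Device ID", "Email address"}, policy):
        print(issue.message)
```

Keyword matching is brittle (it can't tell "we do not collect your device ID" from a real disclosure), which is exactly why a model-based reading of the policy is attractive here.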

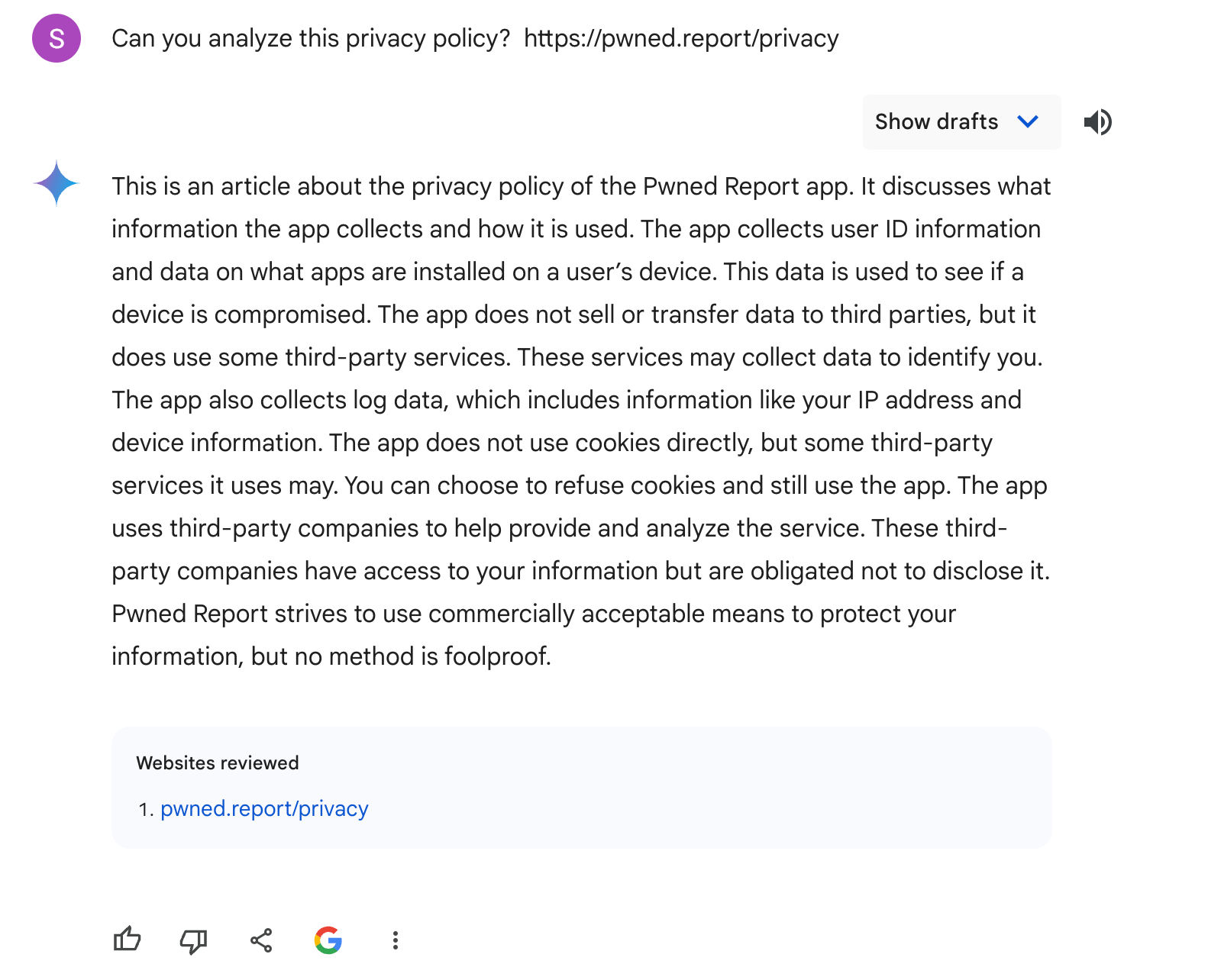

I assume Checks downloads the privacy policy and runs it through Gemini to see whether various privacy controls are in place. As an experiment, I asked Gemini directly to analyze Pwned Report's privacy policy. The initial answer was spot on, but a few follow-up questions produced incorrect answers. For example, when I asked whether the device ID was properly disclosed, Gemini incorrectly said yes, while Checks correctly said no. So Checks might not be using Gemini directly ¯\_(ツ)_/¯

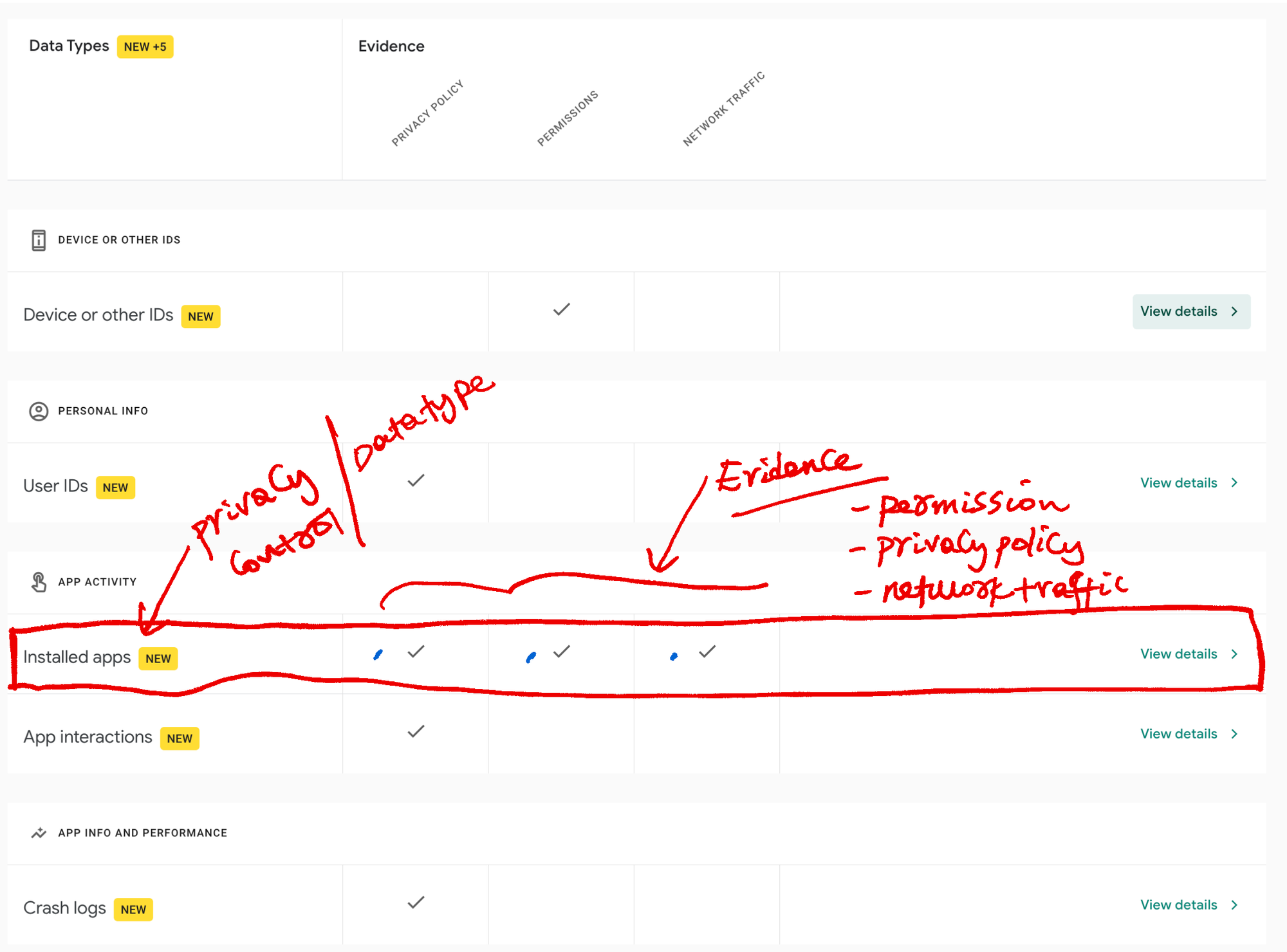

There are other sub-sections that analyze endpoints (APIs the app connects to), third-party SDKs, permissions, etc. While these are awesome, the most consequential one is the Data safety section. Almost every app that makes it to the store must fill it out. It defines what data is collected, how it is used & how it is shared, and an improper declaration can get you booted off the store. Google says it uses NLP to understand your privacy policy and map it to the data types defined under Data safety.
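The permissions sub-section hints at one static signal feeding the Data safety form: requested permissions imply data types you probably need to declare. The sketch below is a hypothetical illustration of that idea, not how Checks works. It assumes the manifest has already been decoded to plain-text XML (APKs store it as binary XML; a tool such as apktool can decode it), and `PERMISSION_TO_DATA_TYPE` is an illustrative mapping, not Google's official one.

```python
"""Hypothetical sketch: map manifest permissions to Data safety data types."""
import xml.etree.ElementTree as ET

ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

# Illustrative mapping only; real declarations also depend on network/SDK behavior.
PERMISSION_TO_DATA_TYPE = {
    "android.permission.ACCESS_FINE_LOCATION": "Precise location",
    "android.permission.ACCESS_COARSE_LOCATION": "Approximate location",
    "android.permission.READ_CONTACTS": "Contacts",
    "android.permission.RECORD_AUDIO": "Voice or sound recordings",
    "android.permission.READ_CALENDAR": "Calendar events",
}


def data_types_from_manifest(manifest_xml: str) -> set[str]:
    """Return the data types implied by <uses-permission> entries."""
    root = ET.fromstring(manifest_xml)
    found = set()
    for perm in root.iter("uses-permission"):
        # android:name is a namespaced attribute, so look it up by its full name.
        name = perm.get(f"{ANDROID_NS}name", "")
        if name in PERMISSION_TO_DATA_TYPE:
            found.add(PERMISSION_TO_DATA_TYPE[name])
    return found


def undeclared(manifest_xml: str, declared: set[str]) -> set[str]:
    """Data types implied by permissions but missing from the declaration."""
    return data_types_from_manifest(manifest_xml) - declared


if __name__ == "__main__":
    manifest = """<manifest xmlns:android="http://schemas.android.com/apk/res/android">
  <uses-permission android:name="android.permission.INTERNET"/>
  <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION"/>
</manifest>"""
    print(undeclared(manifest, declared=set()))  # {'Precise location'}
```

A permission alone doesn't prove collection or sharing, so a signal like this is best treated as a prompt to double-check the form rather than a verdict.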

Code Compliance

This feature is not released yet. However, it claims to run code analysis on the device, which suggests Google is using an on-device model such as Gemini Nano, so source code wouldn't need to be sent to Google.
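To make "inspect code for location access" concrete, here is a much cruder, non-LLM stand-in: a regex scan that ties privacy-relevant API calls to specific source lines. This is purely illustrative and not how Checks' Code Compliance works; `SIGNALS` and `scan_source_tree` are hypothetical names, and the API list covers only a few well-known Android/iOS symbols.

```python
"""Hypothetical regex stand-in for code-level compliance scanning."""
import re
from pathlib import Path

# Illustrative signatures only; real coverage would need far more APIs and SDKs.
SIGNALS = {
    "location access": re.compile(
        r"FusedLocationProviderClient|getLastKnownLocation|"
        r"requestLocationUpdates|CLLocationManager"
    ),
    "device identifier": re.compile(
        r"Settings\.Secure\.ANDROID_ID|AdvertisingIdClient|identifierForVendor"
    ),
}
SOURCE_SUFFIXES = {".kt", ".java", ".swift"}


def scan_source_tree(root: str) -> list[tuple[str, int, str]]:
    """Return (file, line number, signal label) for every matching source line."""
    findings = []
    for path in sorted(Path(root).rglob("*")):
        if path.suffix not in SOURCE_SUFFIXES or not path.is_file():
            continue
        lines = path.read_text(encoding="utf-8", errors="ignore").splitlines()
        for lineno, line in enumerate(lines, start=1):
            for label, pattern in SIGNALS.items():
                if pattern.search(line):
                    findings.append((str(path), lineno, label))
    return findings
```

Calling `scan_source_tree("app/src")` on a project directory lists each hit as a file/line pair. Unlike a model, a regex can't follow data flow (e.g. whether a location is later sent to a third party), which is presumably where an LLM-based approach would earn its keep.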

AI Safety

This feature is also coming soon. It's not clear what kind of privacy controls will be included. According to Google, the goal is to help AI developers release their models responsibly from the start by aligning the app's implementation with custom policies and relevant regulations. We shall see.

That's it for this week.

Cheers

Sandbox Brief Newsletter