Apple Intelligence - What we know so far

Apple Intelligence was introduced back in June. This was somewhat expected given the explosive growth of LLMs everywhere, spearheaded by ChatGPT. Google followed up by releasing Gemini Nano, a model optimized for mobile devices. In this post I will summarize (in short ;)) what I have gathered so far from the marketing materials released by Apple and from bits and pieces mentioned in the keynote and in some of the WWDC sessions.

Lineup

- iOS
  - 3B On-Device Model
- macOS
  - On-Device Code Completion Model
- Cloud
  - 130B-180B Foundation Model*
  - Swift Assist

iOS

The model is built with the AXLearn framework using both licensed and public data. The latter is collected by Applebot, Apple's web crawler, which website owners can opt out of. This rubs me the wrong way, as Apple touts itself as a privacy-conscious company. That said, Apple had to build a system that removes PII and other sensitive information from the data corpus before it's fed to the training pipeline.
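As a rough illustration of the opt-out: Apple's Applebot documentation describes a separate `Applebot-Extended` user agent that site owners can disallow in `robots.txt`. As I understand it, this token does not crawl anything itself; it only controls whether pages already crawled by Applebot may be used for model training. The sketch below uses Python's standard `urllib.robotparser` to check such rules. The `example.com` URL, the rules, and the `is_allowed` helper are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site: opt out of training use
# (Applebot-Extended) while leaving every other crawler unrestricted.
ROBOTS_TXT = """\
User-agent: Applebot-Extended
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/blog/post"
    print("Applebot-Extended:", is_allowed("Applebot-Extended", page))
    print("Applebot:", is_allowed("Applebot", page))
```

With these rules the regular Applebot crawler is still allowed, so the site stays visible to Apple's search features while opting out of training use.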

Specs

- Vocabulary Size of 49k

- Time-to-first-token latency of about 0.6 milliseconds per prompt token

- Generation speed of 30 tokens/sec
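To get a feel for what these numbers mean in practice, here is a back-of-the-envelope estimate. It assumes (my simplification, not something Apple states) that total response time is prompt processing at 0.6 ms per token plus generation at 30 tokens/sec. The function name and the example token counts are made up.

```python
# Figures quoted in the specs above.
PROMPT_MS_PER_TOKEN = 0.6          # prompt processing cost per prompt token
GENERATION_TOKENS_PER_SEC = 30.0   # output generation rate


def estimate_latency_seconds(prompt_tokens: int, output_tokens: int) -> float:
    """Rough end-to-end latency: time to first token plus generation time."""
    time_to_first_token = prompt_tokens * PROMPT_MS_PER_TOKEN / 1000.0
    generation_time = output_tokens / GENERATION_TOKENS_PER_SEC
    return time_to_first_token + generation_time


if __name__ == "__main__":
    # Hypothetical workload: a 1,000-token prompt and a 150-token reply.
    print(f"{estimate_latency_seconds(1000, 150):.1f} s")
```

For a 1,000-token prompt and a 150-token reply this works out to about 0.6 s before the first token appears and about 5 s of generation, so output length dominates the wait.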

macOS

It looks like Apple is using an on-device model for code completion, similar to what Copilot offers but built into Xcode. This is good news for folks who have privacy concerns. However, other tasks such as exploring frameworks, writing tests, and generating code are going to hit Apple's cloud instance. They call this Swift Assist.

This raises the question of why Swift Assist wasn't made part of the on-device model, given the optimizations Apple has done in macOS Sequoia.

Cloud

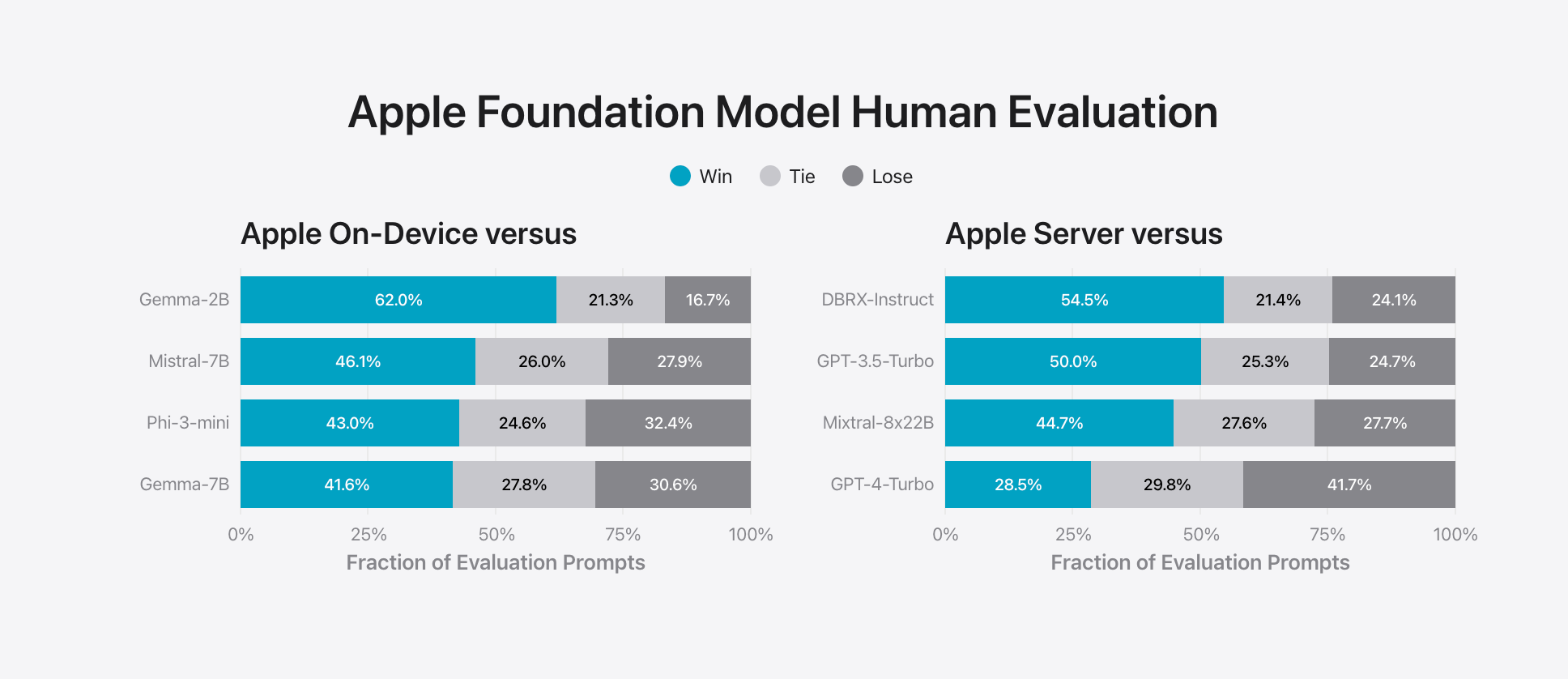

For tasks that can't be handled on-device, a larger model runs on Apple silicon servers within Apple's Private Cloud Compute. This model, comparable in size and functionality to GPT-3.5, is probably implemented as a Mixture-of-Experts. Apple released multiple benchmark numbers that show its model performing better than its competitors.
